A gentle introduction to evaluation techniques for LLM applications

LLMs are often prone to exaggerating or generating an answer that merely sounds correct. Groundedness is about ensuring that the generated responses strictly adhere to the factual information provided.

As a Data Scientist, I've witnessed the rapid evolution and growing influence of Large Language Models (LLMs) in various applications, from autonomous task completion to AI-driven chatbots. I face several challenges when integrating LLMs into my production workflows. Here are some of them:

Challenges

Hallucination in LLMs

Hallucination is one of the most intriguing and challenging aspects of working with Large Language Models (LLMs). When LLMs generate information or answers that are factually incorrect or nonsensical, they are said to be hallucinating. This can be particularly problematic in applications where accuracy is critical, such as healthcare or legal advice. Hallucinations can arise from biases in the training data, insufficient grounding in real-world knowledge, or simply the probabilistic nature of how these models generate text.

Rate Limits for LLMs

Another challenge when deploying LLMs is the rate limiting imposed by providers. Rate limits are restrictions on the number of requests or tokens that an LLM can process within a specific timeframe. These limits can hinder real-time applications that require rapid processing or high-volume queries.

Choice of LLM in Terms of Cost and Performance

Choosing the right LLM for a specific application involves a delicate balance between cost and performance. While high-performance models like GPT-4 or Claude offer advanced capabilities and better contextual understanding, they also come with significant computational costs. On the other hand, smaller models like GPT-3.5 or open-source alternatives may provide adequate performance for the desired level of accuracy, but confirming that requires rigorous evaluation.

The remedy

A robust evaluation system is the remedy for these challenges. It verifies that the models and applications being developed meet their intended objectives and perform reliably under various conditions.

Having a comprehensive evaluation system in place is also crucial for the long-term success and sustainability of the solution.

Components of the evaluation process

The evaluation process has three components:

Dataset

Evaluators

The application (LLM-powered: agents, agentic RAG, agentic task completion)

Dataset

The dataset is the foundation of the evaluation process, providing the input data that LLM-powered applications will process and respond to. A high-quality dataset should be representative, diverse, and unbiased to ensure the evaluation accurately reflects the model's real-world performance. Building such a dataset is a topic in itself and deserves another article.

Evaluators

Evaluators are the individuals or systems responsible for assessing the performance of the application against predefined metrics and criteria. They play a crucial role in interpreting results, providing feedback, and identifying areas for improvement. More on evaluators follows below.

Application (LLM-powered)

The application represents the LLM-powered system being evaluated, which may include agents, agentic retrieval-augmented generation (RAG), or agentic task completion systems. Evaluating these applications involves assessing their ability to understand context, execute tasks, interact effectively, and deliver accurate and relevant results in alignment with their intended use cases.

Let us explore the evaluators. There are three main types:

Human

Heuristic

LLM-Evaluators

Human evaluators bring contextual understanding and nuanced judgment. They can provide qualitative insights, such as assessing the appropriateness and tone of responses. On the other hand, human evaluation can be time-consuming and costly, especially for large datasets.

Heuristic evaluators are rule-based and can quickly assess specific, well-defined criteria, which makes them efficient for initial evaluations. However, heuristic methods may lack the flexibility to handle unexpected or nuanced scenarios, and they can miss contextual subtleties and complex interactions that human evaluators might catch. They are a good candidate for code-generation tasks, where checks such as unit tests and execution tests are objective. This is a very interesting topic, and I will cover it in a separate blog post.
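To make the code-generation case concrete, here is a minimal sketch of a heuristic evaluator. It is only an illustration under my own assumptions: the function name `heuristic_code_eval`, the result keys, and the test-case format are hypothetical, and the generated code is assumed to be Python that defines a single function.

```python
import ast


def heuristic_code_eval(generated_code, func_name, test_cases):
    """Rule-based checks for generated Python code: syntax, execution, unit tests.

    test_cases is a list of (args_tuple, expected_result) pairs (hypothetical format).
    """
    result = {"parses": False, "executes": False, "passed": 0, "total": len(test_cases)}

    # Rule 1: the code must be syntactically valid.
    try:
        ast.parse(generated_code)
        result["parses"] = True
    except SyntaxError:
        return result

    # Rule 2: the code must run without raising.
    # WARNING: exec runs arbitrary code; use a sandbox for untrusted model output.
    namespace = {}
    try:
        exec(generated_code, namespace)
        result["executes"] = True
    except Exception:
        return result

    # Rule 3: the expected function must exist and pass the unit tests.
    func = namespace.get(func_name)
    if not callable(func):
        return result
    for args, expected in test_cases:
        try:
            if func(*args) == expected:
                result["passed"] += 1
        except Exception:
            pass  # a crashing test case counts as a failure
    return result


candidate = "def add(a, b):\n    return a + b\n"
report = heuristic_code_eval(candidate, "add", [((1, 2), 3), ((0, 0), 0)])
print(report)
```

Each rule is cheap and deterministic, which is the appeal of heuristics. The flip side is visible too: nothing here judges readability or whether the code solves the problem in a sensible way.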

LLM-Evaluators are systems where LLMs act as the judges. They use LLMs to score system output based on predefined grading rules or criteria encoded in the LLM prompt. They can operate reference-free, such as checking whether the system output contains offensive content or adheres to specific criteria, or reference-based, such as verifying factual accuracy against a known reference. With agent-based evaluators, it is possible to evaluate more sophisticated scenarios.
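A minimal sketch of this idea is shown below. It is not tied to any provider: `llm` is assumed to be any callable that takes a prompt string and returns the model's text reply, and the prompt template, the 1 to 5 scale, and the function names are my own illustrative choices. Passing a `reference` gives the reference-based mode; leaving it out gives the reference-free mode.

```python
import re

# Hypothetical grading prompt; the criteria are encoded in the prompt itself.
JUDGE_TEMPLATE = """You are an impartial evaluator.
Criteria: {criteria}

{reference_block}Output to evaluate:
{output}

Reply with a single integer score from 1 (worst) to 5 (best)."""


def build_judge_prompt(output, criteria, reference=None):
    """Build a reference-free prompt, or a reference-based one if reference is given."""
    reference_block = f"Reference answer:\n{reference}\n\n" if reference else ""
    return JUDGE_TEMPLATE.format(
        criteria=criteria, reference_block=reference_block, output=output
    )


def llm_judge(llm, output, criteria, reference=None):
    """Ask the judge model for a score; return an int in 1..5, or None if unparseable."""
    reply = llm(build_judge_prompt(output, criteria, reference))
    match = re.search(r"\b([1-5])\b", reply)
    return int(match.group(1)) if match else None


# Stub standing in for a real model call, only to show the flow end to end.
def fake_llm(prompt):
    return "Score: 4"


score = llm_judge(
    fake_llm,
    output="Paris is the capital of France.",
    criteria="Factual accuracy relative to the reference.",
    reference="The capital of France is Paris.",
)
```

Returning `None` for an unparseable reply is a deliberate choice in this sketch: a judge that silently defaults to some score would hide prompt or parsing problems.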

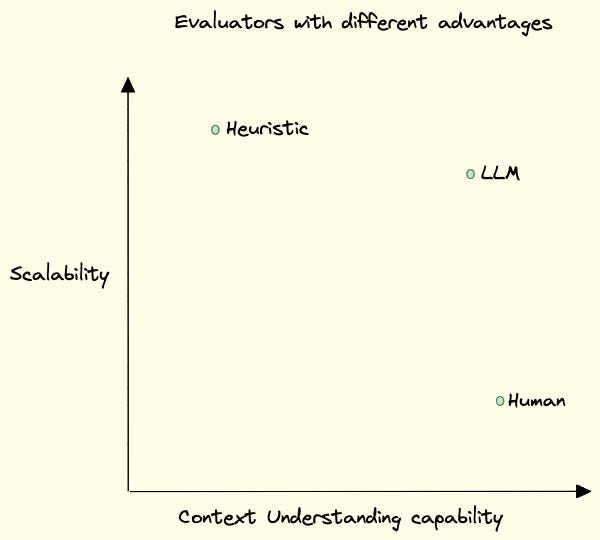

Choosing among the above evaluators involves some trade-offs, which are depicted in the figure.

Evaluation methods

There are two main evaluation methods:

Comparison

Scoring

In the Comparison method, LLM-evaluators compare the generated outputs to reference outputs to assess their quality and accuracy. This approach helps determine how well the system output aligns with expected results, providing a deeper evaluation of the model's performance. A variation of this powerful method, in which two outputs are compared and the preferred one is chosen, underlies RLHF (Reinforcement Learning from Human Feedback).
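The pairwise variation can be sketched as follows. This is an illustration under my own assumptions, not a standard API: `llm` is any callable that maps a prompt string to a text reply, and the prompt wording is hypothetical. The sketch asks the judge twice with the answers swapped, a precaution I add here so that a judge that simply favors whichever answer is shown first ends up with a tie.

```python
def pairwise_compare(llm, question, answer_a, answer_b):
    """Ask the judge twice with the answers swapped; return 'A', 'B', or 'tie'."""

    def ask(first, second):
        prompt = (
            f"Question: {question}\n\n"
            f"Answer 1:\n{first}\n\nAnswer 2:\n{second}\n\n"
            "Which answer is better? Reply with exactly '1' or '2'."
        )
        return llm(prompt).strip()

    first_round = ask(answer_a, answer_b)   # A is shown first
    second_round = ask(answer_b, answer_a)  # B is shown first

    # Count a win only for replies that parse cleanly as '1' or '2'.
    a_wins = (first_round == "1") + (second_round == "2")
    b_wins = (first_round == "2") + (second_round == "1")
    if a_wins > b_wins:
        return "A"
    if b_wins > a_wins:
        return "B"
    return "tie"


# Stub judge for demonstration: prefers whichever answer mentions "Paris".
def prefers_paris(prompt):
    answer_1 = prompt.split("Answer 1:\n")[1].split("\n\nAnswer 2:")[0]
    return "1" if "Paris" in answer_1 else "2"


verdict = pairwise_compare(prefers_paris, "What is the capital of France?", "Paris", "Lyon")
```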

In the Scoring method, an LLM-evaluator scores a system output against predefined grading criteria, such as factual accuracy relative to a known reference. This is particularly useful in a RAG (Retrieval-Augmented Generation) architecture, where it’s important to score the retrieval and generation phases separately to determine the overall quality of the output. Broadly, there are three phases of RAG evaluation:

Context relevance

Groundedness

Answer relevance

What is context relevance? In the retrieval phase, we gather contextual information related to the question from the available sources (Find more about RAG here). This contextual information needs to be evaluated. An LLM-evaluator assesses it using a carefully written prompt and generates a score, called the relevance score. There are many techniques for improving this score, which can be the subject of another blog post.

Groundedness is about ensuring that the generated response strictly adheres to the factual information provided, avoiding any exaggeration or unwarranted expansion. After the context is retrieved, an LLM uses it to form an answer. LLMs are often prone to straying from the facts provided, exaggerating or expanding them into an answer that merely sounds correct. An LLM-evaluator assesses this deviation. It also evaluates the overall relevance between the contextual information and the generated answer, and then produces a score.

Answer relevance is the relevance between the input question and the final answer, which is also scored by an LLM-evaluator.
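The three scores differ only in which pair of texts is compared, which the sketch below tries to make explicit. All names here are hypothetical (`RagScores`, `evaluate_rag`, and the `score(instruction, source, target)` signature). In practice `score` would wrap an LLM-evaluator; the word-overlap scorer is only a toy stand-in so the example runs without a model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RagScores:
    context_relevance: float  # question vs. retrieved context
    groundedness: float       # retrieved context vs. generated answer
    answer_relevance: float   # question vs. generated answer


def evaluate_rag(
    score: Callable[[str, str, str], float],
    question: str,
    context: str,
    answer: str,
) -> RagScores:
    """Score each RAG phase by pairing the right two texts for the scorer."""
    return RagScores(
        context_relevance=score("Is the context relevant to the question?", question, context),
        groundedness=score("Is the answer supported by the context?", context, answer),
        answer_relevance=score("Does the answer address the question?", question, answer),
    )


def overlap_score(instruction: str, source: str, target: str) -> float:
    """Toy stand-in for an LLM-evaluator: fraction of target words found in source."""
    source_words = set(source.lower().split())
    target_words = set(target.lower().split())
    return len(source_words & target_words) / len(target_words) if target_words else 0.0


scores = evaluate_rag(
    overlap_score,
    question="capital of France",
    context="Paris is the capital of France",
    answer="Paris",
)
```

With the toy scorer, the answer "Paris" gets an answer relevance of zero because it shares no words with the question, even though it is obviously relevant. That gap is the reason these scores are usually produced by an LLM-evaluator rather than by a heuristic.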

Apart from these, there are more evaluations that can be implemented, such as embedding distance, toxicity, jailbreaks, sentiment, language mismatch, conciseness, and coherence. I will be back with a more detailed analysis of the different types of relevance evaluation, the tools, and my feedback on them in future posts.
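As a small taste of one item from that list, embedding distance is commonly computed as cosine distance between the embedding of the generated answer and that of a reference. The sketch below assumes the embeddings have already been produced by some embedding model; the short vectors are made-up values for illustration only.

```python
import math


def cosine_distance(u, v):
    """Return 1 - cosine similarity; 0 means same direction, 1 means orthogonal."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_u * norm_v)


# Made-up 3-dimensional "embeddings"; real ones have hundreds of dimensions.
answer_embedding = [0.2, 0.7, 0.1]
reference_embedding = [0.25, 0.65, 0.05]
distance = cosine_distance(answer_embedding, reference_embedding)
```

A smaller distance suggests the answer is semantically closer to the reference, although the threshold for "close enough" depends on the embedding model and has to be calibrated on your own data.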